B27


No, 'Hopeless the Weather Girl' was definitely BBC.

Didn't it used to be the case that ITV used weather models but the BBC used people who were genuine meteorologists?


I agree with much of what you say. There was a time when forecasting was entirely subjective. Forecasters were at most airfields, RAF and civil, and they were good at knowing local conditions. Large-scale forecasts, forecast charts and guidance came from a central office. Skill was not good.

I think it is sad that Lustyd has started a really interesting and what might have been a really beneficial thread, and ruined it by his single-mindedness. His thread, his rules, I suppose, but I thought this was a forum for the benefit of us all.

As for the weather, I wonder how many of you who use tabular weather data such as Windguru and so on actually stop and look at synoptic charts and how they evolve over time. At the minute (or at least yesterday; I haven't looked at this morning's charts yet) there was a slack area of low pressure over Scotland. The wind forecast was slightly different as we flew north, clearly showing the challenge of predicting the pressure gradient in such a system. When I cycled home from work, I started in the dry, and 300 m later the road was soaking wet from a recent shower. That shower might have been triggered by a farmer ploughing a field miles away, setting off a cumulus cloud that then grew into a CB (cumulonimbus) big enough to cause local wind effects, and so on. Weather is reported in probabilities. Aviation forecasts have multiple layers, with different weather assigned different probabilities. All it takes is a slight change for a weather effect to move to a different probability level, or to fall off the forecast altogether. If models are being run constantly, the forecast will change. I think that might be the issue here. Tabular weather data might show weather with a probability of, say, 80% and not report weather with a probability of, say, 40%. A small change in the model might swap the two in the next forecast.

As for Lustyd's assertion that weather forecasting will change in the next 10 years, I am sure that AI will change everything in life. But the problem I foresee is that, because we expect weather forecasts to be cheap, we will rely less and less on data derived from organic matter. We see fewer and fewer trained and experienced met observers in aviation, replaced by a laser looking straight up and three visibility machines looking across a metre or so of air. In the old days a human would look across the airfield at the fog bank rolling in from the sea and change the weather report, but the machine is telling Exeter that it's going to be glorious. You're in data, Lustyd; tell me how a future of poorer data will result in better modelling...

Works in Scotland too!

Then, of course, there's the Welsh version:

If you can't see across the valley, it's raining.

If you can see across the valley, it's going to rain.

It would help if we had some kind of representation of confidence level and what the alternate forecasts and their confidence levels could be. No idea how this could be represented in a meaningful way though.

No, you're all wrong.

The problem isn't the quality of the modelling, it's the presentation. We take complex models of chaotic states and force them to output a set of single point estimates - if we want better forecasts we need to find better ways of consuming the data.

It is not really quite like that. The models smooth their outputs. The GFS calculates on a grid of about 13 km, but smoothing means that the data are averaged over an area of around 60+ km. Variations in weather and wind occur on much finer scales, leading to complaints that the models are not accurate. If models were judged on average values, they would look much better than they apparently are. Even the Meteo France AROME can, at best, only provide a forecast over areas of around 5 to 7 km, despite what providers say or imply about resolution.
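To illustrate the smoothing point, here is a toy sketch (not how GFS actually works): a hypothetical 1 km transect with a 12 kn background wind and a 5 km strip of 20 kn, say off a headland, averaged with a simple 61-point box filter as a stand-in for a roughly 60 km effective footprint. The filter, the transect and all the numbers are assumptions for illustration only.

```python
def box_average(values, window):
    """Centred moving average over `window` samples (odd number).
    Near the ends the window is truncated rather than padded."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out

# Hypothetical 121 km transect at 1 km spacing: 12 kn background,
# 20 kn for 5 km where the wind accelerates round a headland.
wind = [12.0] * 121
for i in range(58, 63):
    wind[i] = 20.0

# Stand-in for a ~60 km effective resolution.
smoothed = box_average(wind, 61)
print(max(wind), round(max(smoothed), 1))
```

The local 20 kn barely shows once averaged, which is roughly the gap between a grid-box value and what you actually meet off the headland.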

This is possible. An algorithm could be written, or AI used. In a crude way, it is already done in marine forecasts using undefined terminology. A counter-argument would be that putting numbers on the occurrence of a gale, for example, would imply precision where it does not exist.

It would help if we had some kind of representation of confidence level and what the alternate forecasts and their confidence levels could be. No idea how this could be represented in a meaningful way though.

At one level it would be the most likely wind strength, 90% confidence that it won't exceed x, 90% confidence that it won't be less than y, and direction between a and b with a certain confidence, etc.

Could that be done??
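A minimal sketch of that summary, assuming you have one wind-speed value per ensemble member for a single place and time (the member values below are made up). The 10th and 90th percentiles give the "won't be less than y" and "won't exceed x" bounds, with the median as the most likely value. Direction is left out because averaging angles across north (350° and 010°) needs circular statistics.

```python
import statistics

def wind_summary(member_speeds):
    """Summarise ensemble wind speeds (knots) as a most-likely value plus
    an 80% band: roughly 10% of members below `low`, 10% above `high`."""
    deciles = statistics.quantiles(member_speeds, n=10)  # 9 cut points
    return {
        "most_likely": statistics.median(member_speeds),
        "low": deciles[0],    # ~90% of members at or above this
        "high": deciles[-1],  # ~90% of members at or below this
    }

# Hypothetical 20-member ensemble for one place and time (knots).
members = [11, 12, 12, 13, 13, 13, 14, 14, 14, 15,
           15, 15, 16, 16, 17, 18, 19, 21, 24, 27]
print(wind_summary(members))
```

Bear in mind these bounds describe the spread of the ensemble, which is only a rough proxy for real-world confidence unless the ensemble is well calibrated.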

That's not really what I mean. Absolutely they smooth and average, but then what comes out is a point estimate for each blob of space and time, i.e., in this place at 3pm the wind will be 12 knots from 272 degrees, it'll be 18C and it'll be raining 3mm/hour.

I would absolutely love to see this kind of information.

We'd have a much better result if the model was allowed to convey not just the level of confidence but also dependencies ("if the wind direction changes by 3pm there'll be heavy weather, if it doesn't then it'll be clear")
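One way to produce that kind of conditional statement, sketched under assumptions: if each ensemble member is tagged with whether the wind veered by 3pm and whether it produced heavy weather, you can split the members on the first and count the second within each branch. The member data and the tagging are invented for illustration.

```python
def conditional_outlook(members):
    """Split ensemble members on a condition and return the fraction with
    the outcome in each branch. Each member is (veered_by_3pm, heavy_weather)."""
    branches = {}
    for veered, heavy in members:
        n, k = branches.get(veered, (0, 0))
        branches[veered] = (n + 1, k + int(heavy))
    return {veered: k / n for veered, (n, k) in branches.items()}

# Hypothetical 10-member ensemble: (did the wind veer by 3pm?, heavy weather?)
members = [(True, True), (True, True), (True, True), (True, False),
           (False, False), (False, False), (False, False), (False, False),
           (False, True), (False, False)]
outlook = conditional_outlook(members)
print(f"If it veers by 3pm: {outlook[True]:.0%} chance of heavy weather")
print(f"If it doesn't:      {outlook[False]:.0%} chance of heavy weather")
```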

If the weather forecast says it will be calm and sunny, and it turns out to be blowing a gale and raining, then I am annoyed.

You make some valid points. You, I and some others in the forum would like to see some indication of the reliability of the forecast. I would like to see ensemble grid-point values. However, to the majority, particularly among the general public, such data would cause confusion. I still think that the smoothing effect would defeat the object.

That's not really what I mean. Absolutely they smooth and average, but then what comes out is a point estimate for each blob of space and time, i.e., in this place at 3pm the wind will be 12 knots from 272 degrees, it'll be 18C and it'll be raining 3mm/hour.

At best you might get some uncertainty expressed in "percentage chance of rain" but even then you're throwing away a ton of modelled information.

The flip-flopping forecast that started the thread is probably an indication that the modelled probability distribution is multimodal, i.e., there's a very good chance we'll be in one of two completely different regimes and they're roughly equally likely. On any given run, noise is pushing one or the other to be *most* likely.
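A toy illustration of that flip-flop, assuming a hypothetical setup in which each ensemble member lands in either a light (~8 kn) or a windy (~22 kn) regime with equal chance. Collapsing each run to a single headline by majority vote can swap between runs even though the underlying 50/50 split never changes. The regimes, the 15 kn threshold and the 30-member count are all assumptions.

```python
import random

def headline(members, threshold=15.0):
    """Collapse members into one 'most likely' label, as a point forecast does.
    Members above `threshold` knots count as the windy regime."""
    windy = sum(1 for m in members if m > threshold)
    share = windy / len(members)
    label = "windy" if share > 0.5 else "light"
    return label, share

def run(seed, n=30):
    """One hypothetical model run: each member picks a regime at 50/50."""
    rng = random.Random(seed)
    return [rng.gauss(22, 2) if rng.random() < 0.5 else rng.gauss(8, 2)
            for _ in range(n)]

for seed in range(4):
    label, share = headline(run(seed))
    print(f"run {seed}: headline={label:5s} windy share={share:.0%}")
```

Printing the windy share alongside the headline is the point: a share near 50% says "two regimes, could go either way", which the single label hides.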

We'd have a much better result if the model was allowed to convey not just the level of confidence but also dependencies ("if the wind direction changes by 3pm there'll be heavy weather, if it doesn't then it'll be clear")

We all remember the times the forecasters got it wrong, but forget the times they get it right. Also, "Yeah, they promised us a nice F4-5, but we got a 7 off that headland." Well, yes, that happens round headlands. What about the nice F4-5 for the rest of the journey?

Memories are selective, so I take some of the claims made in this thread with a rock of salt.

Some good advice there. Whilst we still have human input into marine forecasts, it should be heeded. It is significant that there is still human input into GMDSS forecasts.

It has made it hard this year, especially when cruising with family and not wanting to scare their socks off.

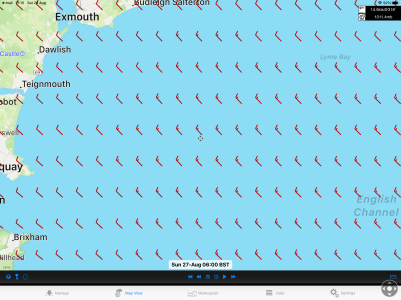

As others have said, I have found that checking several weather models and keeping a close eye and ear on the coastguard forecasts during the day has given some clarity on what is coming 24 to 48 hours ahead. But I have twice set off in a forecast 4-5, only for small craft warnings for 6+ to be issued and fully delivered. A good plan is also to monitor the actual weather and recent history at any nearby weather buoys (rather than land-based stations).

Never seemed to have this trouble before the Internet...