Yealm

Well-Known Member

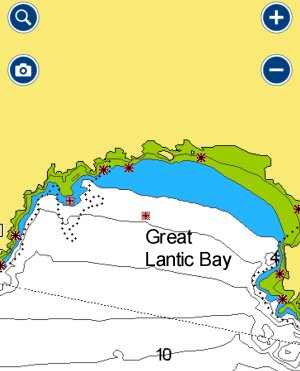

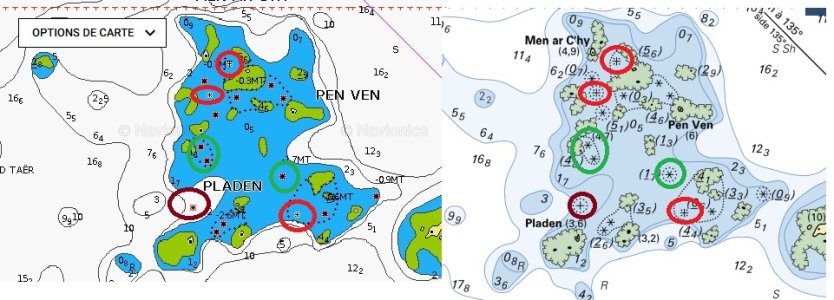

On the Navionics (now Garmin) app chart, rocks that are always underwater are shown as crosses on a red hatched background.

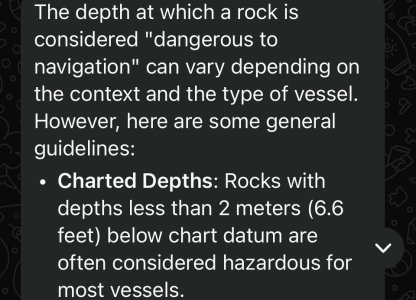

My question is: why doesn’t the app give the minimum depth of water above the rock, so one can tell whether it’s a ‘bad’ rock or one not to worry about?

Or is there a convention that displayed rocks are always shallower than a specified depth, and so always a danger to leisure sailors?

Many thanks
